Blue/Green Deployment in Kubernetes with Terraform

When development teams want to upgrade or deploy new versions of an application, they often face common challenges that impact user experience and application availability. In fact, the average cost of downtime is $5,600 per minute, according to Gartner. These challenges include:

- Downtime: When upgrading an application, there's often a period when the service is unavailable, which hurts user experience, especially for business-critical apps. For example, upgrading the NGINX Ingress controller in a Kubernetes cluster from version 1.10 to 1.12 can disrupt traffic if the upgrade also requires upgrading the cluster itself to Kubernetes 1.31. Any downtime during that transition can leave the Ingress controller temporarily unavailable, which breaks traffic routing to your applications and interrupts service for users.

- Breaking Changes: New versions of an application can contain changes that break the current system. Imagine a cloud-based API platform that serves many client applications. If an update removes or changes critical API endpoints that developers rely on, the applications using those APIs can start failing, which disrupts the businesses that depend on the platform.

- Inconsistent Environments: When deploying updates across multiple environments such as staging and production, differences between them can cause issues. For example, a new feature might work perfectly in staging but fail in production because of different configurations or missing updates, which leads to confusion and delays in identifying and fixing the problem.

These are the problems teams often face when upgrading or deploying applications, leading to service disruptions, poor user experiences, and operational delays. To address these issues, it’s important to adopt strategies that ensure easy updates without affecting the user experience.

Solution Strategy

To overcome the challenges of downtime, application failures, and inconsistent environments during application upgrades, two common strategies can be used: in-place rolling updates and Blue/Green deployments. Both aim to keep the application available during the transition, but they make different trade-offs in infrastructure cost and rollback speed.

In-place Rolling Update in Kubernetes

In an in-place rolling update, Kubernetes gradually replaces old versions of your application with new ones, making sure that some instances of the application are always running while the upgrade happens.

Kubernetes does not update all the pods at once, which keeps the application available to users during the upgrade. It makes sure that a minimum number of pods are always running while the application is being updated. For example, for a web app with ten pods where the rollout replaces one pod at a time, users can still reach the app through the remaining nine pods at every step of the update.

This avoids downtime because the pods are not all replaced at the same time, and pods running the previous version keep running until their replacements are ready. If new pods fail to become ready, the rollout stalls instead of replacing more old pods, so the remaining old pods keep serving traffic, although with reduced capacity if some were already taken down. Kubernetes does not roll back automatically: the Deployment is eventually reported as failing to progress, and you need to fix the new version or revert it with kubectl rollout undo.
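As an illustration, the Terraform sketch below configures the rolling-update behavior described above for a hypothetical deployment named rolling-example, which is not part of the walkthrough later in this guide. With max_unavailable and max_surge both set to "1", at most one of the ten pods is unavailable at any moment, and the readiness probe keeps a new pod out of rotation until nginx answers on port 80. The probe path and timings are assumptions to adapt to your application.

```hcl
# Hypothetical example deployment, separate from the Blue/Green walkthrough.
resource "kubernetes_deployment" "rolling_example" {
  metadata {
    name = "rolling-example"
  }

  spec {
    replicas = 10

    # Replace pods gradually: at most one extra pod is created and at most
    # one existing pod is unavailable at any point during a rollout.
    strategy {
      type = "RollingUpdate"

      rolling_update {
        max_surge       = "1"
        max_unavailable = "1"
      }
    }

    selector {
      match_labels = {
        app = "rolling-example"
      }
    }

    template {
      metadata {
        labels = {
          app = "rolling-example"
        }
      }

      spec {
        container {
          name  = "web"
          image = "nginx:stable"

          port {
            container_port = 80
          }

          # A new pod counts as ready (and receives traffic) only after
          # nginx answers HTTP requests on port 80.
          readiness_probe {
            http_get {
              path = "/"
              port = 80
            }

            initial_delay_seconds = 5
            period_seconds        = 5
          }
        }
      }
    }
  }
}
```

Changing the image and running terraform apply would then trigger a rollout that respects these limits.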

Blue/Green Deployments in Kubernetes

A Blue/Green Deployment is a strategy that uses two identical environments, called Blue and Green. The Blue environment hosts the current stable version of the application, and the Green environment runs the new version. After verifying that the new version is working well, traffic is switched from the Blue environment to the Green environment.

Suppose your e-commerce platform is running in the Blue environment, and you’re introducing a new feature like personalized recommendations in the Green environment. Once Green is fully configured and tested, you can switch all users to the Green environment. If any issues arise, you can simply roll back to Blue with very little impact on users.

This minimizes downtime because traffic can be switched between environments with little or no impact on users. If something fails, you can revert to the stable Blue environment, making it a safer choice for production deployments.

Both Rolling Updates and Blue/Green Deployments have their own pros and cons, so the right strategy depends on your application requirements, risk tolerance, and the need for uptime. Let’s look at Blue/Green deployment to minimize downtime and manage risk during upgrades.

Overview of Blue/Green Deployment on Kubernetes

In this section, we will explore the Blue/Green Deployment strategy in Kubernetes. We’ll cover how the flow of the application in Blue/Green deployment works and the benefits it brings.

How does Blue/Green Deployment work?

In Blue/Green Deployment, you first prepare the new version of your application in a separate environment, called the Green environment, while the existing version continues to run in the Blue environment. This setup works on a local Kubernetes cluster as well as on a managed cloud service. Once the Green environment is fully ready and tested, traffic is routed from the Blue environment to the Green environment. This approach lets you validate the new version in a fully functional environment without impacting users on the Blue environment.

For a video streaming platform, the Blue environment continues to serve live streams and content during an upgrade. Once the Green environment is fully verified, user traffic is redirected to it, so viewers keep uninterrupted access.

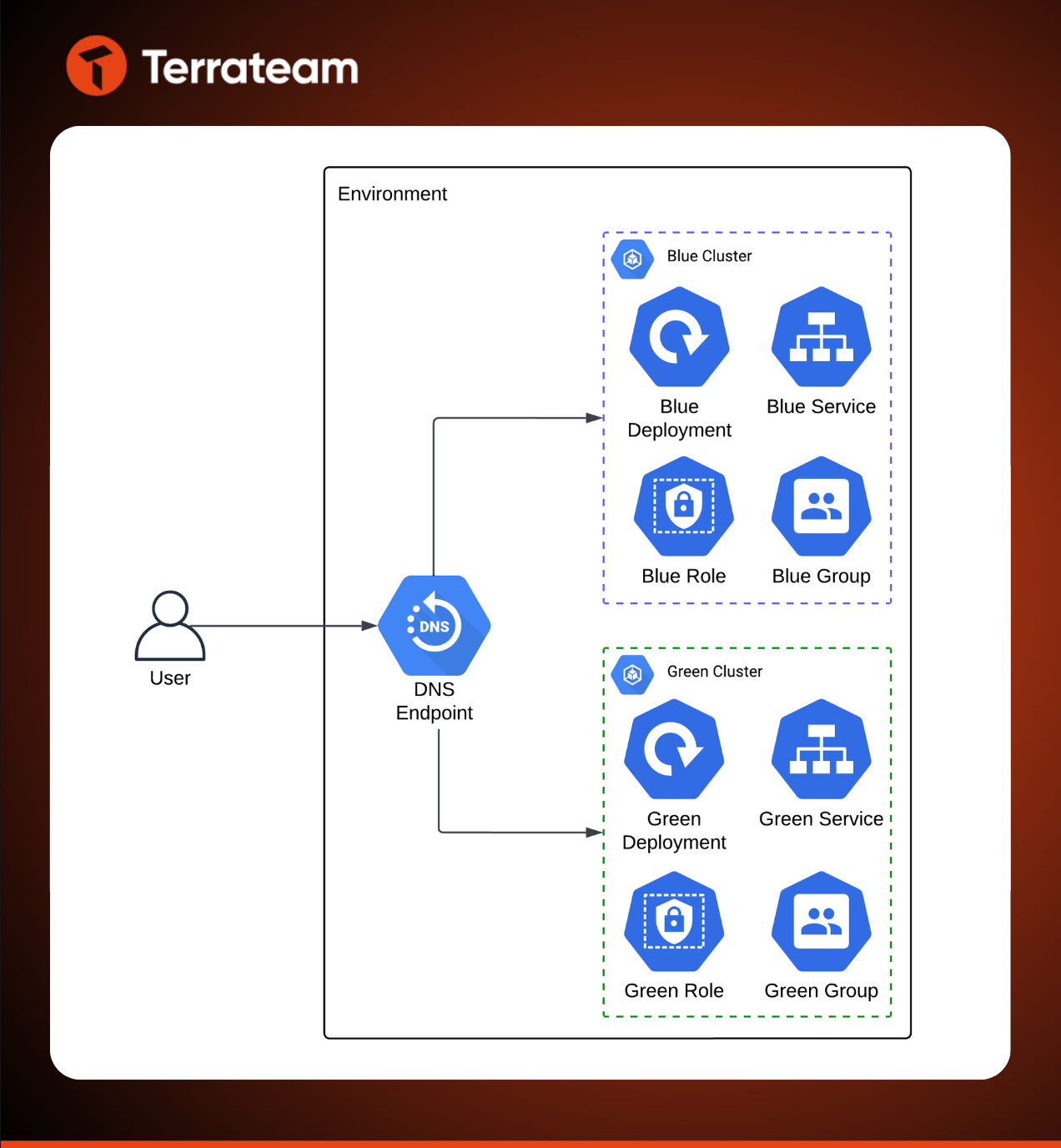

To better understand this concept, here’s a diagram showing how Blue/Green deployment works.

Here’s a breakdown of the flow represented in the diagram:

- The user accesses the application through a DNS Endpoint. The DNS directs user traffic to the appropriate environment based on configuration.

- The Blue Cluster is the current live environment, running the stable version of the application.

- The Green Cluster is the staging environment, prepared with the updated application version.

- During a deployment, traffic initially flows to the Blue environment. After the Green environment is fully tested and verified, traffic is redirected to the Green environment without disruption to users.

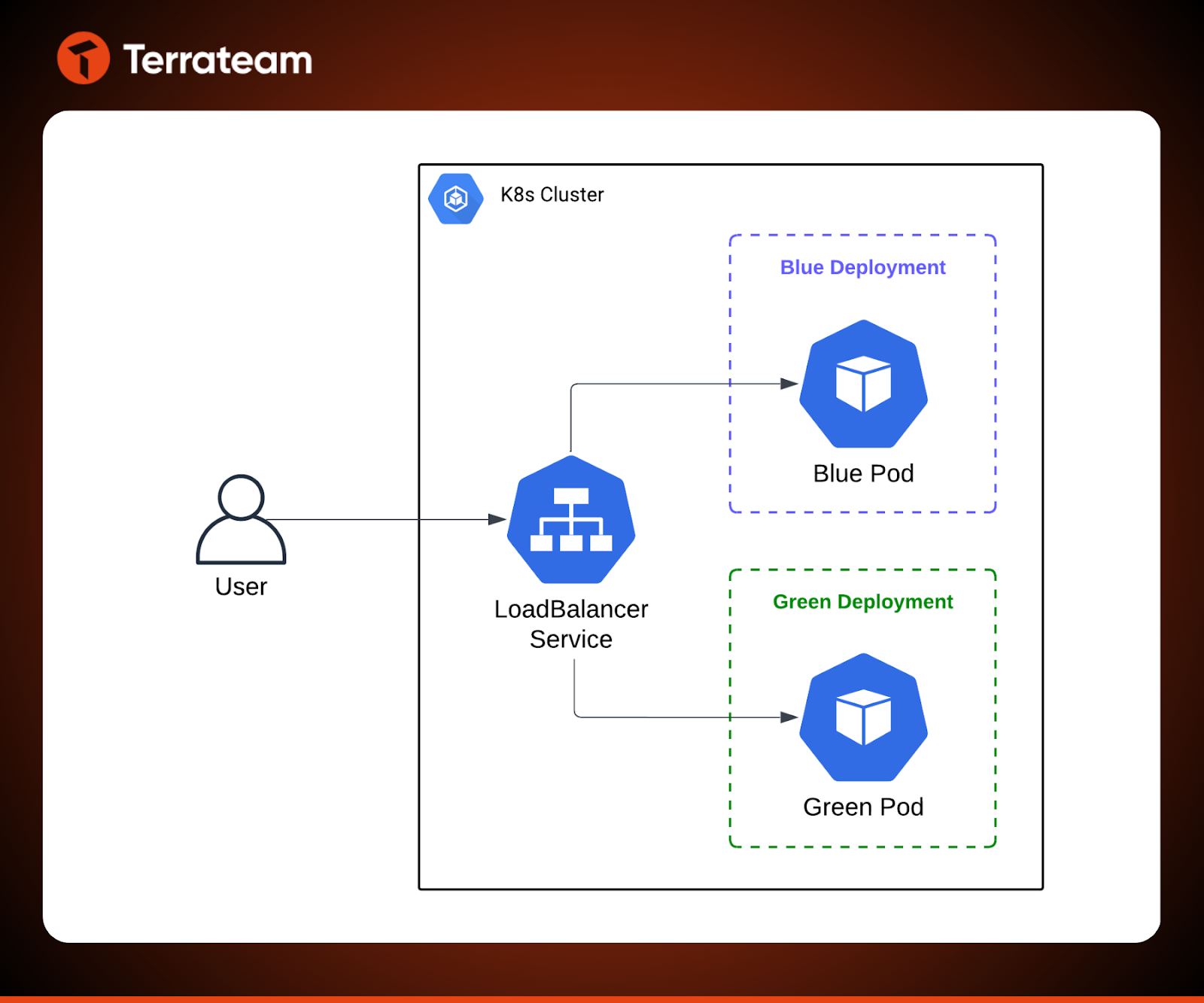

Another way to implement Blue/Green deployment is within a single cluster. In this setup, you create separate blue and green deployments inside the same cluster. By using a LoadBalancer service, you can switch external traffic between the two deployments. Here's a diagram to better visualize how Blue/Green deployment works in this scenario:

Here’s an explanation of the flow shown in the diagram:

- The user makes a request to the application through a LoadBalancer Service.

- Both the Blue and Green deployments are hosted within the same Kubernetes cluster.

- The Blue Deployment represents the live version of the application.

- The Green Deployment is the new version of the application that is being prepared and tested.

- The LoadBalancer Service routes traffic to whichever deployment matches its label selector, so changing the selector switches traffic between Blue and Green.

In this guide, we will focus on the single-cluster approach for simplicity. Now let's compare Blue/Green with Canary deployment, which is another popular strategy for upgrading your application to a new version.

Comparing Blue/Green and Canary Deployments

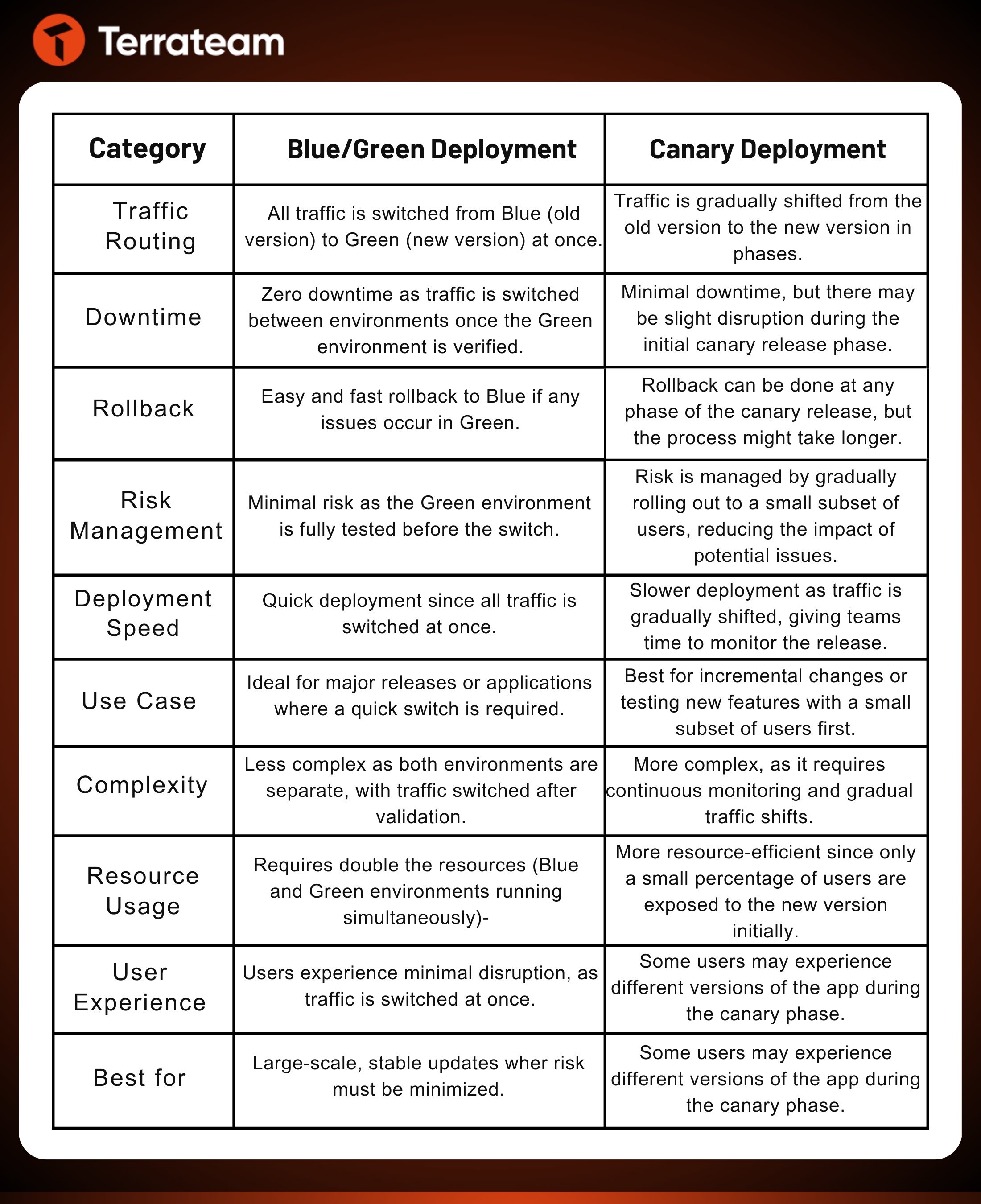

Before we talk about the benefits of Blue/Green deployment, let’s first compare it with another common method called Canary Deployment. Both are used to reduce risks and downtime during updates, but they work in different ways.

The table below highlights the key differences between Blue/Green and Canary deployments:

Canary deployments gradually roll out changes to a small subset of users, which allows real-time monitoring and incremental rollout. Blue/Green deployments, by contrast, switch all traffic to the new environment at once, which makes them a better fit for large updates that need a quick, complete cutover to the new version. The choice depends on the application type, risk tolerance, and available infrastructure resources.
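To make the contrast concrete, a rough canary can be approximated in plain Kubernetes by giving the stable and canary pods a shared label and letting one Service select it, so traffic splits approximately in proportion to replica counts. The sketch below uses hypothetical names (web-stable, web-canary, web-service) and is separate from the Blue/Green walkthrough; a service mesh or an ingress controller with weighted routing gives finer control over the split.

```hcl
# Hypothetical replica-ratio canary: 9 stable pods and 1 canary pod.
locals {
  tracks = {
    stable = { replicas = 9, image = "nginx:stable" }
    canary = { replicas = 1, image = "nginx:latest" }
  }
}

resource "kubernetes_deployment" "web" {
  for_each = local.tracks

  metadata {
    name = "web-${each.key}"
  }

  spec {
    replicas = each.value.replicas

    # Each Deployment manages only the pods of its own track.
    selector {
      match_labels = {
        app   = "web"
        track = each.key
      }
    }

    template {
      metadata {
        labels = {
          app   = "web"
          track = each.key
        }
      }

      spec {
        container {
          name  = "web"
          image = each.value.image

          port {
            container_port = 80
          }
        }
      }
    }
  }
}

resource "kubernetes_service" "web" {
  metadata {
    name = "web-service"
  }

  spec {
    # Selects both tracks, so roughly one in ten new connections
    # lands on the canary pod.
    selector = {
      app = "web"
    }

    port {
      port        = 80
      target_port = 80
    }

    type = "LoadBalancer"
  }
}
```

Blue/Green, in contrast, flips the Service selector from one complete environment to the other in a single step, as the walkthrough below shows.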

Benefits of Blue/Green Deployment

Blue/Green deployment offers several benefits, particularly when it comes to ease of rollback and minimizing downtime during upgrades. Some of the major benefits of Blue/Green Deployment include:

- Minimal Disruption: Since you can switch traffic between two environments, there's negligible downtime during the upgrade process. Users maintain access to the application throughout.

- Fast Rollback: If the application fails in the new version, rolling back to the previous stable Blue environment is easy and fast. This minimizes risk and impact on the user.

- Environment Isolation: By using two separate environments, bugs and issues in the new version can be isolated and fixed without affecting the production system actively serving users.

- Simplified Testing: You can run integration and smoke tests against the Green environment while Blue keeps serving production traffic, ensuring that everything works before promoting the Green environment to production.

Now, let’s begin with the step-by-step guide to set up blue/green deployments in your Kubernetes cluster.

Walkthrough: Blue/Green using Kubernetes Deployments with Terraform

This guide will walk you through the process of setting up a Blue/Green Deployment strategy using Kubernetes and Terraform. You’ll create two separate environments, Blue and Green, in your Kubernetes cluster, manage the traffic routing with a Load Balancer service, and understand how to switch traffic between these environments with minimal downtime. We’ll also cover how to quickly roll back to the Blue environment in case something goes wrong.

Prerequisites

Before starting, ensure you fulfill these requirements:

- Terraform is installed on your local machine.

- A running Kubernetes cluster (local with Minikube, or managed on a cloud platform such as Amazon EKS, GKE, or AKS).

- kubectl, configured to interact with your Kubernetes cluster.

Create the Blue and Green Deployment with Terraform

With Terraform, you can create two separate Kubernetes Deployment resources: one for the Blue environment and one for the Green environment.

Kubernetes Terraform Provider Configuration

First, set up your Terraform provider to manage Kubernetes resources. Create a main.tf file, configure the Kubernetes provider, and initialize the configuration with the terraform init command.

```hcl
# Connection details for the target cluster, passed in as input variables.
provider "kubernetes" {
  host                   = var.kubernetes_host
  cluster_ca_certificate = base64decode(var.kubernetes_ca_certificate)
  token                  = var.kubernetes_token
}
```
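The provider block above references three input variables, which Terraform requires you to declare. A minimal sketch of those declarations, together with the provider source, might look like this; the descriptions are illustrative, and marking the token as sensitive keeps it out of plan output.

```hcl
terraform {
  required_providers {
    kubernetes = {
      source = "hashicorp/kubernetes"
    }
  }
}

# Inputs consumed by the provider "kubernetes" block.
variable "kubernetes_host" {
  type        = string
  description = "API server URL of the target cluster"
}

variable "kubernetes_ca_certificate" {
  type        = string
  description = "Base64-encoded cluster CA certificate"
}

variable "kubernetes_token" {
  type        = string
  description = "Bearer token with permission to manage Deployments and Services"
  sensitive   = true
}
```

You can supply the values through a terraform.tfvars file or TF_VAR_-prefixed environment variables such as TF_VAR_kubernetes_host. For a local Minikube cluster, you could instead point the provider at your kubeconfig with its config_path and config_context arguments and skip these variables.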

Define the Blue Deployment

Create a Kubernetes deployment for the Blue environment. This is where your current stable version will reside. This guide uses the nginx:stable image for the stable Blue deployment; replace it with the stable version of your application image.

```hcl
resource "kubernetes_deployment" "blue" {
  metadata {
    name = "blue-app"
  }

  spec {
    replicas = 3

    selector {
      match_labels = {
        app = "blue"
      }
    }

    template {
      metadata {
        # The Load Balancer service selects pods by this label.
        labels = {
          app = "blue"
        }
      }

      spec {
        container {
          name  = "blue-app-container"
          image = "nginx:stable"

          port {
            container_port = 80
          }
        }
      }
    }
  }
}
```

Define the Green Deployment

Next, create the Green deployment, which will hold the new version of the application. This guide uses nginx:latest for the Green deployment; replace it with the new version of your application image. In practice, pin a specific version tag rather than latest so the Green environment is reproducible.

```hcl
resource "kubernetes_deployment" "green" {
  metadata {
    name = "green-app"
  }

  spec {
    replicas = 3

    selector {
      match_labels = {
        app = "green"
      }
    }

    template {
      metadata {
        labels = {
          app = "green"
        }
      }

      spec {
        container {
          name  = "green-app-container"
          # Candidate version; pin a specific tag in real deployments.
          image = "nginx:latest"

          port {
            container_port = 80
          }
        }
      }
    }
  }
}
```
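Because the Blue and Green blocks differ only in their color label and image, you could generate both from a single resource with for_each. The sketch below is an alternative to the two separate blocks, not an addition to them, since both would create Deployments with the same names. The local app_versions map is a hypothetical name, and the moved blocks (Terraform 1.1 or later) tell Terraform that already-applied Blue and Green resources have only changed address, so it does not recreate them.

```hcl
# Hypothetical map of environment color to container image.
locals {
  app_versions = {
    blue  = "nginx:stable" # current stable release
    green = "nginx:latest" # candidate release
  }
}

# One resource block produces both the "blue-app" and "green-app" Deployments.
resource "kubernetes_deployment" "app" {
  for_each = local.app_versions

  metadata {
    name = "${each.key}-app"
  }

  spec {
    replicas = 3

    selector {
      match_labels = {
        app = each.key
      }
    }

    template {
      metadata {
        # Same labels as before, so the Load Balancer selector still matches.
        labels = {
          app = each.key
        }
      }

      spec {
        container {
          name  = "${each.key}-app-container"
          image = each.value

          port {
            container_port = 80
          }
        }
      }
    }
  }
}

# Record the address change for resources that were already applied.
moved {
  from = kubernetes_deployment.blue
  to   = kubernetes_deployment.app["blue"]
}

moved {
  from = kubernetes_deployment.green
  to   = kubernetes_deployment.app["green"]
}
```

With this layout, preparing the next release becomes a one-line change to the map.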

Set Up the Load Balancer Service

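To manage traffic between the Blue and Green environments, create a Kubernetes Service of type LoadBalancer. Initially, it routes all traffic to the Blue environment. On Minikube, the service's external IP stays pending until you run minikube tunnel, and Terraform waits for the load balancer by default, so start the tunnel before running terraform apply.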

```hcl
resource "kubernetes_service" "loadbalancer" {
  metadata {
    name = "app-service"
  }

  spec {
    # Only pods labeled app = "blue" receive traffic initially.
    selector = {
      app = "blue"
    }

    port {
      port        = 80
      target_port = 80
    }

    type = "LoadBalancer"
  }
}
```
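Before switching traffic, you need a way to reach the Green pods for testing while app-service still points at Blue. One common approach, sketched here with a hypothetical Service named green-preview, is a second ClusterIP service that always selects the Green pods and is reachable only from inside the cluster or through kubectl port-forward.

```hcl
# Hypothetical internal Service for testing Green before it goes live.
resource "kubernetes_service" "green_preview" {
  metadata {
    name = "green-preview"
  }

  spec {
    # Always targets Green, independent of where app-service points.
    selector = {
      app = "green"
    }

    port {
      port        = 80
      target_port = 80
    }

    # ClusterIP: not exposed outside the cluster.
    type = "ClusterIP"
  }
}

# Example smoke test from your machine:
#   kubectl port-forward service/green-preview 8080:80
#   curl -i http://localhost:8080/
```

Production users never see Green through this service, so testers can exercise it as long as needed before the switch.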

Apply the Terraform Configuration

Run terraform apply to create the resources in your Kubernetes cluster. Terraform will set up both the Blue and Green environments and the Load Balancer service.

Shift the Traffic to Green Deployment

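Once you’ve tested your Green environment, you can switch traffic from the Blue deployment to the Green deployment by changing the selector of the Load Balancer service. The service immediately starts routing to the pods that match the new selector, so the switch causes little or no downtime, provided the Green pods are already running and ready.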

```shell
kubectl patch service app-service -p '{"spec":{"selector":{"app":"green"}}}'
```

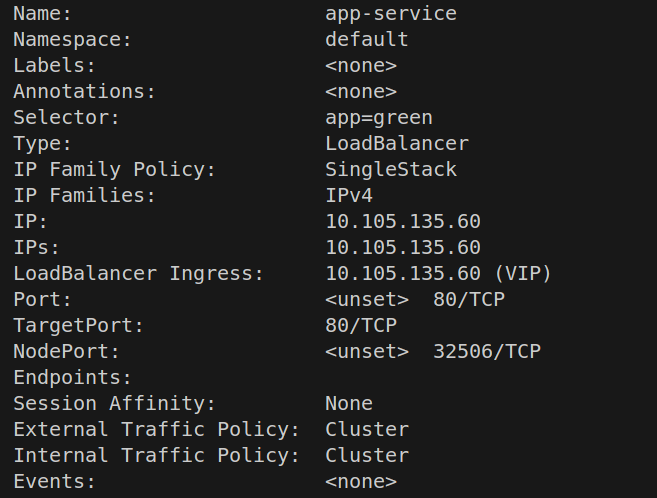

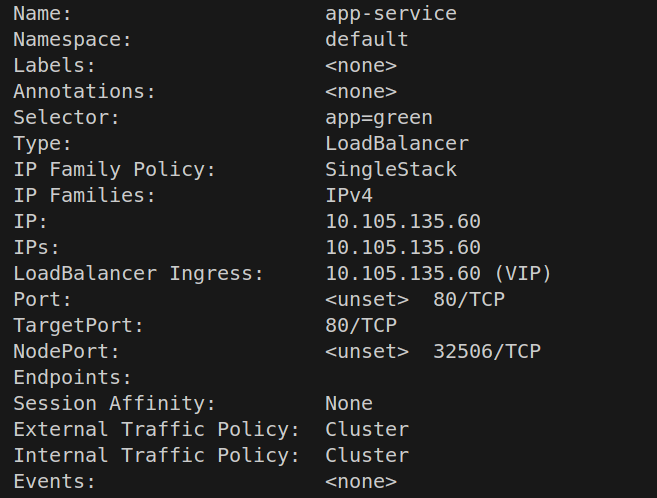

You can verify the change by describing the LoadBalancer service in Kubernetes.

```shell
kubectl describe service app-service
```

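Check the “Selector” field in the output to confirm the change. The value should be app=green instead of app=blue. Keep in mind that kubectl patch changes the live service outside Terraform: your configuration still declares app = "blue", so the next terraform apply will set the selector back to Blue.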
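Because app-service is managed by Terraform, you could also perform the switch through Terraform itself, so the configuration and the live service never disagree. In this sketch, a hypothetical active_color variable drives the selector; it would replace the kubernetes_service "loadbalancer" block defined earlier rather than sit alongside it.

```hcl
# Hypothetical variable naming the environment that receives live traffic.
variable "active_color" {
  type    = string
  default = "blue"

  validation {
    condition     = contains(["blue", "green"], var.active_color)
    error_message = "The active_color value must be either \"blue\" or \"green\"."
  }
}

resource "kubernetes_service" "loadbalancer" {
  metadata {
    name = "app-service"
  }

  spec {
    # Traffic follows the variable instead of a hard-coded label.
    selector = {
      app = var.active_color
    }

    port {
      port        = 80
      target_port = 80
    }

    type = "LoadBalancer"
  }
}

# Switch to Green:  terraform apply -var="active_color=green"
# Roll back:        terraform apply -var="active_color=blue"
```

With this design, both the switch and the rollback go through the normal terraform plan and review steps.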

Delete the Blue Deployment

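Once traffic has fully moved to Green and you're confident it's stable, you can optionally delete the Blue deployment to save costs. Deleting it removes the fast rollback path described below, and because Blue is managed by Terraform, the next terraform apply will recreate it unless you also remove the kubernetes_deployment.blue resource from your configuration.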

```shell
kubectl delete deployment blue-app
```
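If you want to stop paying for idle Blue pods without deleting anything Terraform manages, one option is to scale Blue to zero replicas through a variable. The blue_replicas variable below is a hypothetical name that you would reference from the replicas argument of the kubernetes_deployment "blue" resource. The trade-off is that rollback is no longer instant, because Blue pods must start again before they can serve traffic.

```hcl
# Hypothetical variable controlling how many Blue pods keep running.
variable "blue_replicas" {
  type        = number
  default     = 3
  description = "Number of Blue pods; set to 0 to idle Blue without deleting it"

  validation {
    condition     = var.blue_replicas >= 0
    error_message = "The blue_replicas value cannot be negative."
  }
}

# In resource "kubernetes_deployment" "blue", replace the hard-coded count:
#
#   spec {
#     replicas = var.blue_replicas
#     ...
#   }
#
# Idle Blue:     terraform apply -var="blue_replicas=0"
# Restore Blue:  terraform apply -var="blue_replicas=3"
```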

Rollback Plan

In case of a failure after switching traffic to the Green environment, it’s important to have a rollback plan. Here’s how you can revert to the Blue environment using your Terraform Kubernetes provider.

Switch Traffic Back

You can use Terraform to point the service selector back to the Blue environment. Make sure the Load Balancer service resource in your configuration selects the Blue deployment:

```hcl
resource "kubernetes_service" "loadbalancer" {
  metadata {
    name = "app-service"
  }

  spec {
    selector = {
      app = "blue"
    }

    port {
      port        = 80
      target_port = 80
    }

    type = "LoadBalancer"
  }
}
```

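Run terraform apply again. Because the earlier kubectl patch changed the selector outside Terraform, Terraform detects the difference and sets the selector back to app = "blue", reverting traffic to the Blue environment.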

Then confirm that the Blue environment is back in service and that Green no longer receives traffic: run kubectl describe svc app-service and check that the Selector field shows app=blue.

As long as the Blue deployment is still running, this approach minimizes user impact by letting you switch traffic back to the stable version quickly, without a full redeployment or downtime.

Challenges/Drawbacks of using Blue/Green deployment on Kubernetes

While Blue/Green Deployment offers significant benefits, including almost zero downtime and easy rollback, there are challenges and drawbacks to consider when using Blue/Green deployment strategy:

1. Resource Intensive: In Blue/Green deployment, the Blue and Green environments run at the same time, so you need roughly twice the infrastructure resources (compute, storage, and application instances) for the duration of the deployment, which increases costs.

2. Complexity in Managing State: If your application involves persistent data or shared resources like a database, managing state between the two environments is difficult. A sudden switch might lead to data inconsistencies when the Green environment is deployed but hasn’t fully integrated with the state of the Blue environment. For example, in a content management system, the Blue environment might be storing data in a database while the Green environment is deployed with an updated database schema. If the data migration is not handled carefully, it could lead to errors or loss of data.

3. Traffic Routing Issues: Though Kubernetes allows you to easily switch traffic between environments, managing traffic flow efficiently can become challenging when scaling. Deciding when to shift 100% of the traffic to the new environment, and handling edge cases where some users experience the Blue version and others experience the Green version, requires careful planning and testing. For instance, a SaaS application might face inconsistent user experience if certain requests are sent to Blue and others to Green, especially when the new version has slight behavioral changes.

Now, managing these Blue/Green deployments in production Kubernetes clusters can become a bit complicated when multiple teams like frontend, backend, and operations work together without clear coordination.

For example, the backend team might still be rolling out a new API to the Green environment, so it isn’t ready for traffic yet. Meanwhile, the operations team might update the LoadBalancer to start sending traffic to Green. If these changes aren’t timed correctly, traffic can reach Green before it’s fully prepared, causing disruptions. Similarly, configuration differences between staging and production often cause issues when the new version is rolled out, even if it worked during testing.

These problems come from not having a proper system to handle these updates, approvals, and workflows across multiple teams. Without a transparent process, deployments can get delayed due to mismatched configurations, which may increase the chances of downtime and make the whole process less reliable. That’s where Terrateam comes in.

Implementing Blue/Green Deployment Strategy using Terraform with Terrateam

Terrateam is a Terraform and OpenTofu GitOps tool that connects directly with your GitHub repository, making it simple for multiple teams to manage their IaC, collaborate, and automate deployments. Since everyone works on the same GitHub repo, all updates and changes are tracked in pull requests. Team members are notified via emails or GitHub notifications, making sure that everyone stays updated with the latest changes within the infrastructure. Learn more about Terrateam's features to see how it supports DevOps teams with GitOps workflows.

It’s really easy to set up Terrateam with your GitHub repo or organization.

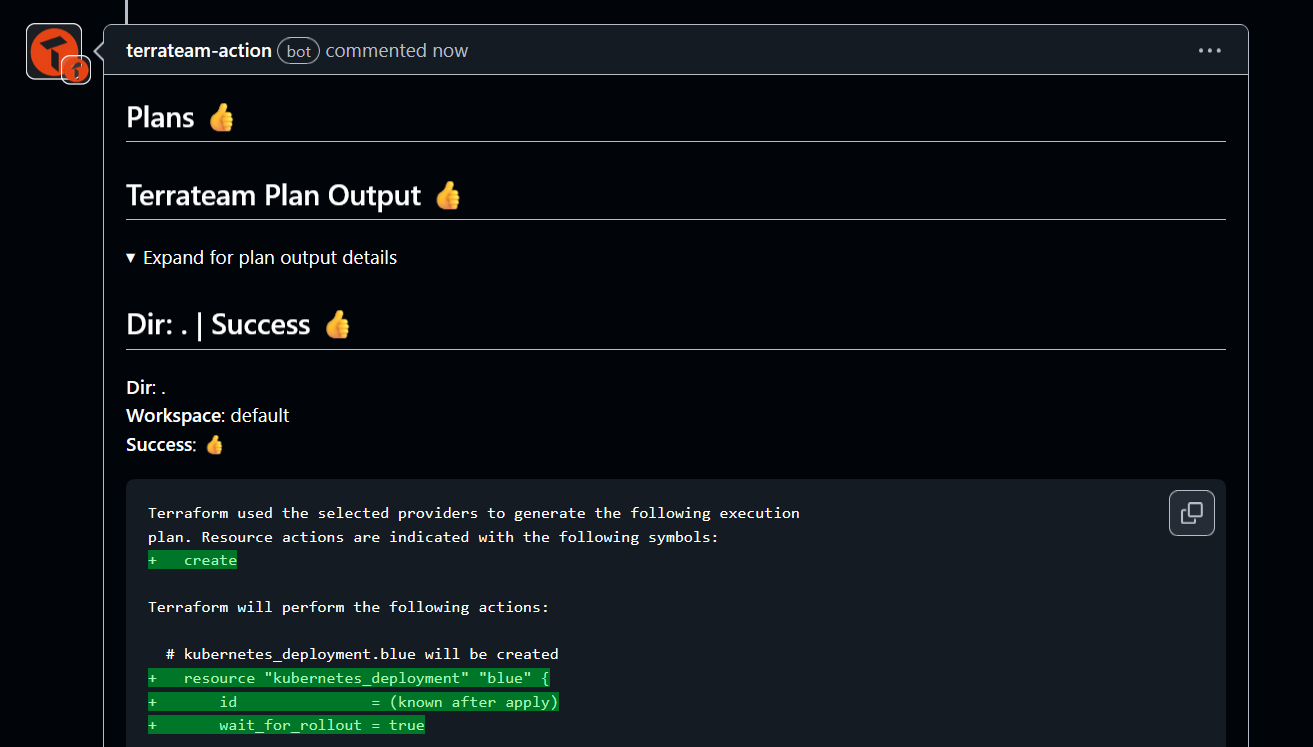

Once installed, you can integrate your Terraform code with Terrateam using its GitHub integration. Start by creating a new branch in your repo and adding a main.tf file with your Terraform code. Open a pull request, and Terrateam will automatically trigger workflows to validate, format, and check your code using its pre-configured hooks.

This GitOps-driven approach ensures that all changes are reviewed, approved, and applied directly from the pull request. By managing everything through GitHub, your team maintains full visibility and control over every update.

Because Terrateam triggers these workflows and notifies team members directly from the pull request, you can deploy Kubernetes clusters and manage Blue/Green deployments with more visibility. This keeps the process smoother, reduces mistakes, and makes deployments faster and more reliable.

Want to make your deployments easier? Start with Terrateam and keep your process clear, organized, and fast.

Conclusion

By now, you should understand how Blue/Green deployments help reduce downtime by running two environments and switching traffic smoothly. Kubernetes simplifies this by letting you manage deployments, pods, and services easily, ensuring smooth transitions between environments. Terraform makes it easier to define and automate the entire setup as code, so you can quickly create, update, and manage the Kubernetes Deployment resources for both the Blue and Green environments.